[Kaggle DAY17]Real or Not? NLP with Disaster Tweets!

솜씨좋은장씨 2020. 3. 14. 23:45


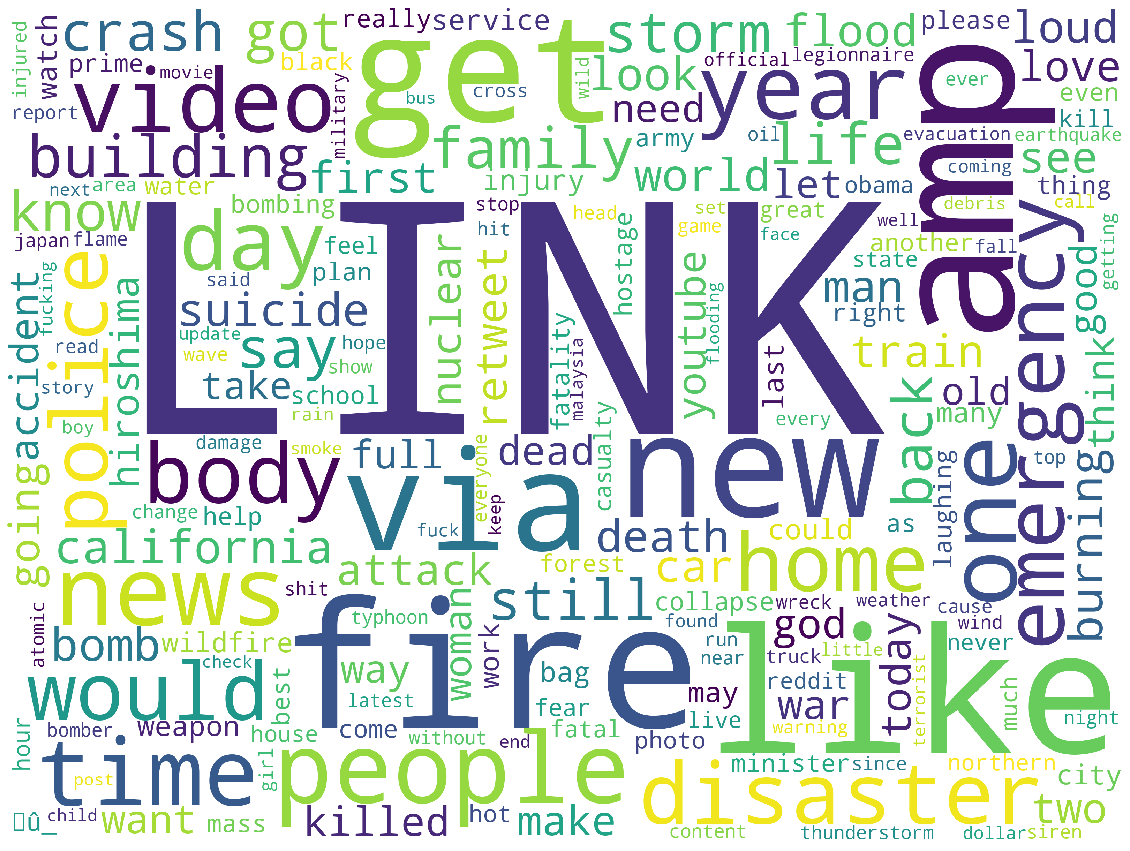

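Kaggle competition, day 17!

Today I changed two things in my existing data preprocessing: I switched the tokenizer from word_tokenize to TreebankWordTokenizer, and after tokenizing I used a lemmatizer instead of a stemmer.

Compared with the previous approach, the tokens stay a little closer to the original word forms.

I fed the result into the day 16 model without any other changes.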

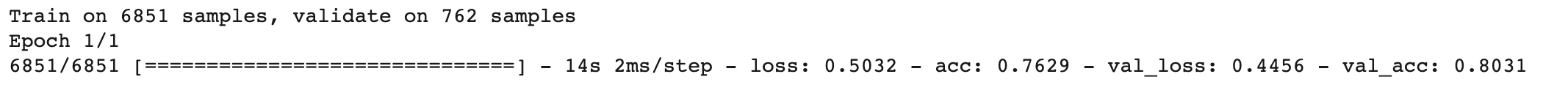
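As a rough sketch of the preprocessing change described above (not the exact code used for this post), the snippet below tokenizes a sentence with NLTK's TreebankWordTokenizer and then runs the tokens through both a stemmer and a lemmatizer so the two outputs can be compared. The choice of PorterStemmer, the lower-casing step, and the function names `normalize_tokens` and `compare_stem_and_lemma` are assumptions made for illustration. WordNetLemmatizer needs the WordNet corpus, which can be fetched once with `nltk.download("wordnet")`.

```python
from typing import Callable, Iterable, List, Tuple


def normalize_tokens(tokens: Iterable[str], normalize: Callable[[str], str]) -> List[str]:
    """Lower-case each token and map it through `normalize` (a stemmer or lemmatizer)."""
    return [normalize(tok.lower()) for tok in tokens]


def compare_stem_and_lemma(text: str) -> Tuple[List[str], List[str]]:
    """Tokenize with TreebankWordTokenizer and return (stemmed, lemmatized) tokens."""
    # NLTK is imported here so that normalize_tokens stays usable without it.
    from nltk.stem import PorterStemmer, WordNetLemmatizer
    from nltk.tokenize import TreebankWordTokenizer

    tokens = TreebankWordTokenizer().tokenize(text)
    # Assumption: PorterStemmer stands in for whichever stemmer was used before.
    stemmed = normalize_tokens(tokens, PorterStemmer().stem)
    # lemmatize() treats every token as a noun unless a POS tag is passed.
    lemmatized = normalize_tokens(tokens, WordNetLemmatizer().lemmatize)
    return stemmed, lemmatized
```

Calling `compare_stem_and_lemma` on a few tweets side by side is a quick way to see how much of the original word form each option keeps.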

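First submission

```python
import numpy as np
import pandas as pd
from keras import optimizers
from keras.layers import Dense, Dropout, Embedding, GRU
from keras.models import Sequential

# Newer Keras releases name the first argument learning_rate instead of lr.
adam = optimizers.Adam(lr=0.05, decay=0.1)

# Embedding -> GRU -> Dropout -> 2-unit output.
# y_train is assumed to be one-hot encoded (two columns) to match the output layer.
model_1 = Sequential()
model_1.add(Embedding(vocab_size, 100))
model_1.add(GRU(32))
model_1.add(Dropout(0.5))
model_1.add(Dense(2, activation='sigmoid'))
model_1.compile(loss='binary_crossentropy', optimizer=adam, metrics=['acc'])

history = model_1.fit(x_train, y_train, batch_size=32, epochs=1, validation_split=0.1)

# Take the higher-scoring output unit as the predicted label.
predict = model_1.predict(x_test)
predict_labels = np.argmax(predict, axis=1)

# Write the submission file.
ids = list(test['id'])
submission_dic = {"id":ids, "target":predict_labels}
submission_df = pd.DataFrame(submission_dic)
submission_df.to_csv("kaggle_day17.csv", index=False)
```

Result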

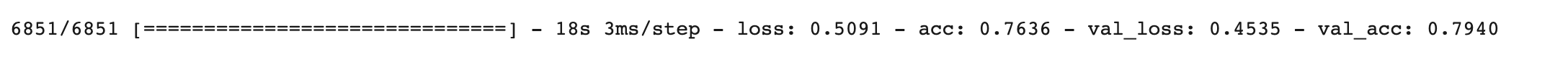
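The five submissions in this post share one architecture and differ only in the Adam learning rate and the batch size, so they could be driven from a single config list. The sketch below is one possible way to do that, not the code actually used here: `RunConfig`, `RUNS`, `build_model`, and `run_all` are hypothetical names, the learning rates, batch sizes, and file names are copied from the post, and the `lr`/`decay` arguments assume the same older Keras version as the rest of the post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunConfig:
    filename: str    # submission file written for this run
    lr: float        # Adam learning rate
    batch_size: int  # mini-batch size passed to fit()


# Values copied from the five submissions in this post.
RUNS = [
    RunConfig("kaggle_day17.csv", 0.05, 32),
    RunConfig("kaggle_day17_2.csv", 0.03, 20),
    RunConfig("kaggle_day17_3.csv", 0.05, 16),
    RunConfig("kaggle_day17_4.csv", 0.05, 20),
    RunConfig("kaggle_day17_5.csv", 0.05, 28),
]


def build_model(vocab_size, lr):
    """Embedding -> GRU -> Dropout -> 2-unit sigmoid output, as in the post."""
    # Keras is imported lazily so the config list can be inspected without it.
    from keras import optimizers
    from keras.layers import Dense, Dropout, Embedding, GRU
    from keras.models import Sequential

    model = Sequential()
    model.add(Embedding(vocab_size, 100))
    model.add(GRU(32))
    model.add(Dropout(0.5))
    model.add(Dense(2, activation="sigmoid"))
    model.compile(
        loss="binary_crossentropy",
        optimizer=optimizers.Adam(lr=lr, decay=0.1),
        metrics=["acc"],
    )
    return model


def run_all(x_train, y_train, x_test, ids, vocab_size, runs=RUNS):
    """Train one fresh model per config and write one submission file each."""
    import numpy as np
    import pandas as pd

    for cfg in runs:
        model = build_model(vocab_size, cfg.lr)
        model.fit(x_train, y_train, batch_size=cfg.batch_size,
                  epochs=1, validation_split=0.1)
        labels = np.argmax(model.predict(x_test), axis=1)
        pd.DataFrame({"id": ids, "target": labels}).to_csv(cfg.filename, index=False)
```

Keeping the settings in one list makes it easy to see at a glance which hyperparameters changed between submissions.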

두번째 제출

adam1 = optimizers.Adam(lr=0.03, decay=0.1)

model_2 = Sequential()

model_2.add(Embedding(vocab_size, 100))

model_2.add(GRU(32))

model_2.add(Dropout(0.5))

model_2.add(Dense(2, activation='sigmoid'))

model_2.compile(loss='binary_crossentropy', optimizer=adam1, metrics=['acc'])

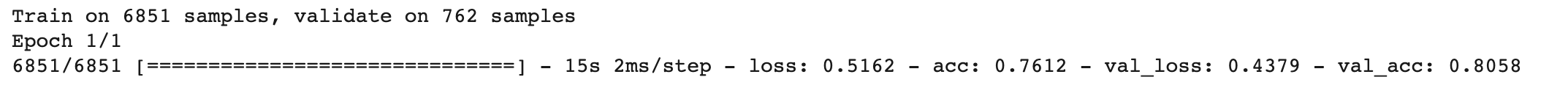

history = model_2.fit(x_train, y_train, batch_size=20, epochs=1, validation_split=0.1)

결과

predict = model_2.predict(x_test)

predict_labels = np.argmax(predict, axis=1)

for i in range(len(predict_labels)):

predict_labels[i] = predict_labels[i]

ids = list(test['id'])

submission_dic = {"id":ids, "target":predict_labels}

submission_df = pd.DataFrame(submission_dic)

submission_df.to_csv("kaggle_day17_2.csv", index=False)

Third submission

```python
# Learning rate 0.05 again, batch size 16.
adam2 = optimizers.Adam(lr=0.05, decay=0.1)

model_3 = Sequential()
model_3.add(Embedding(vocab_size, 100))
model_3.add(GRU(32))
model_3.add(Dropout(0.5))
model_3.add(Dense(2, activation='sigmoid'))
model_3.compile(loss='binary_crossentropy', optimizer=adam2, metrics=['acc'])

history = model_3.fit(x_train, y_train, batch_size=16, epochs=1, validation_split=0.1)

# Take the higher-scoring output unit as the predicted label.
predict = model_3.predict(x_test)
predict_labels = np.argmax(predict, axis=1)

ids = list(test['id'])
submission_dic = {"id":ids, "target":predict_labels}
submission_df = pd.DataFrame(submission_dic)
submission_df.to_csv("kaggle_day17_3.csv", index=False)
```

Result

Fourth submission

```python
# Learning rate 0.05, batch size 20.
adam3 = optimizers.Adam(lr=0.05, decay=0.1)

model_4 = Sequential()
model_4.add(Embedding(vocab_size, 100))
model_4.add(GRU(32))
model_4.add(Dropout(0.5))
model_4.add(Dense(2, activation='sigmoid'))
model_4.compile(loss='binary_crossentropy', optimizer=adam3, metrics=['acc'])

history = model_4.fit(x_train, y_train, batch_size=20, epochs=1, validation_split=0.1)

# Take the higher-scoring output unit as the predicted label.
predict = model_4.predict(x_test)
predict_labels = np.argmax(predict, axis=1)

ids = list(test['id'])
submission_dic = {"id":ids, "target":predict_labels}
submission_df = pd.DataFrame(submission_dic)
submission_df.to_csv("kaggle_day17_4.csv", index=False)
```

Result

Fifth submission

```python
# Learning rate 0.05, batch size 28.
adam4 = optimizers.Adam(lr=0.05, decay=0.1)

model_5 = Sequential()
model_5.add(Embedding(vocab_size, 100))
model_5.add(GRU(32))
model_5.add(Dropout(0.5))
model_5.add(Dense(2, activation='sigmoid'))
model_5.compile(loss='binary_crossentropy', optimizer=adam4, metrics=['acc'])

history = model_5.fit(x_train, y_train, batch_size=28, epochs=1, validation_split=0.1)

# Take the higher-scoring output unit as the predicted label.
predict = model_5.predict(x_test)
predict_labels = np.argmax(predict, axis=1)

ids = list(test['id'])
submission_dic = {"id":ids, "target":predict_labels}
submission_df = pd.DataFrame(submission_dic)
submission_df.to_csv("kaggle_day17_5.csv", index=False)
```

Result

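While writing this post after making all the submissions, I noticed that I had not applied the lemmatizer to the test data used to generate the predictions. Tomorrow I plan to lemmatize the test data as well and submit again.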
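One way to avoid this kind of train/test mismatch is to route every split through the same preprocessing callable. The snippet below is a minimal sketch of that idea under stated assumptions: `preprocess_splits` and `toy_preprocess` are hypothetical names, the example sentences are made up, and `toy_preprocess` only stands in for the real tokenizer and lemmatizer pipeline.

```python
from typing import Callable, Dict, Iterable, List


def preprocess_splits(
    splits: Dict[str, Iterable[str]],
    preprocess: Callable[[str], List[str]],
) -> Dict[str, List[List[str]]]:
    """Run the same preprocessing callable over every split (train, test, ...)."""
    return {name: [preprocess(text) for text in texts] for name, texts in splits.items()}


# Stand-in preprocessing for illustration only; in practice this would be the
# tokenizer + lemmatizer pipeline that was applied to the training data.
def toy_preprocess(text: str) -> List[str]:
    return text.lower().split()


# Made-up sentences, not rows from the competition data.
processed = preprocess_splits(
    {"train": ["Fires are burning"], "test": ["Floods were reported"]},
    toy_preprocess,
)
```

Because both splits go through one function, a step such as lemmatization cannot be applied to the training data and silently skipped for the test data.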

Thanks for reading.
